Dynamic Loading

Notes

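Asynchronously loaded data is controlled by JavaScript. For example, images only show up once you scroll down.

In the browser, go to Inspect -> Network -> XHR, look at the requests there, and grab the URL.

Examples: Sina Weibo comments, Douban movie reviews.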

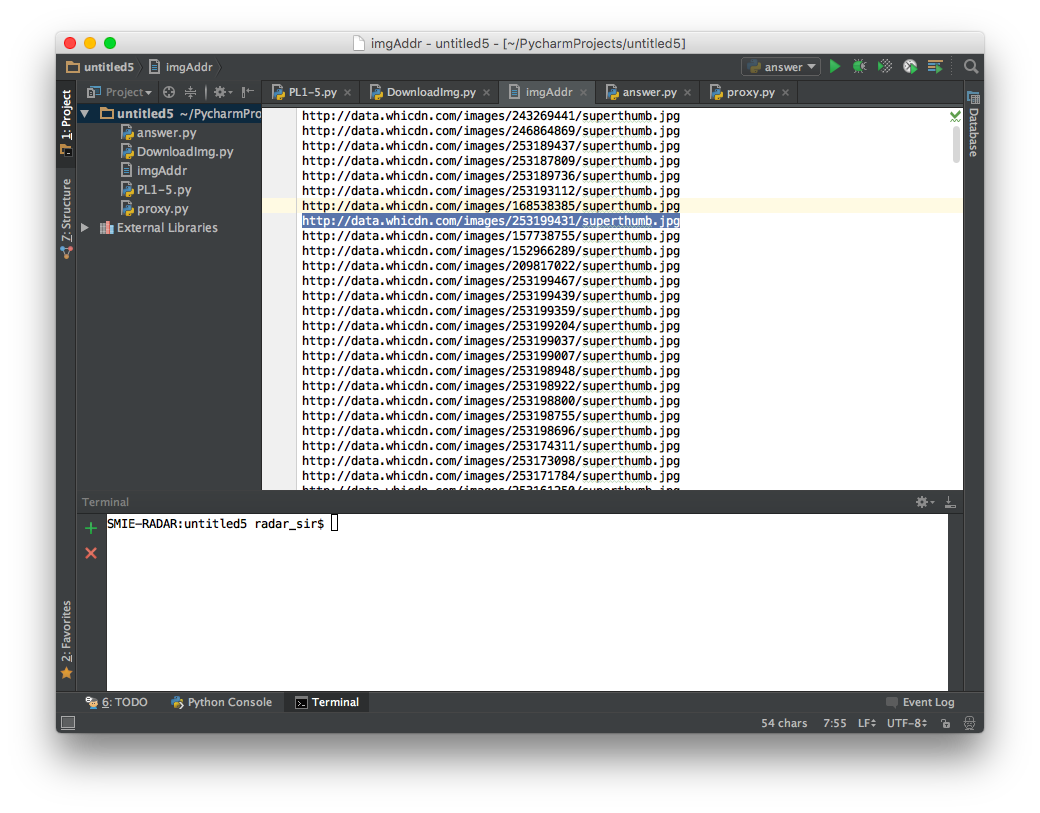
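Once the URL shows up in the XHR tab, the pages can be requested directly by changing the page number at the end. Here is a minimal sketch of that idea using only the standard library. The base URL is the one used in the code later in this post; the `User-Agent` value and the helper names `page_urls`, `build_request` and `fetch` are my own assumptions for illustration, not anything the site documents.

```python
import urllib.request

# Paged URL taken from the XHR tab; the page number is appended at the end.
BASE_URL = "http://weheartit.com/inspirations/taylorswift?scrolling=true&page="


def page_urls(base, first, last):
    """Return the URLs for pages first..last (inclusive)."""
    return [f"{base}{n}" for n in range(first, last + 1)]


def build_request(url):
    """Wrap the URL in a Request carrying a browser-like User-Agent.

    The header value is an assumption; some sites reject the default one.
    """
    return urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})


def fetch(url, timeout=10):
    """Download one page and return its body as text."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


# Only builds and prints the URLs; call fetch(u) to actually request a page.
for u in page_urls(BASE_URL, 1, 3):
    print(u)
```

Whether the server answers with HTML or JSON depends on the site, so it is worth checking the response in the Network tab before deciding how to parse it.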

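Task: scrape photos of pretty girls. Result: the download did not work, so all I ended up with is a pile of addresses.

Image sites like this probably have some anti-scraping mechanism.. The answer code did not manage to download the images either.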

Code

```python
from bs4 import BeautifulSoup
import requests
import time

# Paged URL found in the XHR tab; the page number is appended below.
url = 'http://weheartit.com/inspirations/taylorswift?scrolling=true&page='

# Write one image address per line into the file 'imgAddr'.
with open('imgAddr', "w") as address:
    for i in range(1, 10):
        time.sleep(3)  # pause between requests
        wb_data = requests.get(url + str(i))
        soup = BeautifulSoup(wb_data.text, 'lxml')
        imgs = soup.select('img.entry-thumbnail')
        for p in imgs:
            src = p.get('src')
            if src:  # skip tags without a src, otherwise "None" gets written
                print(src, file=address)
```

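DownloadImage: the saved addresses did not successfully download any photos QAQ…

But I did download the image from the Baidu homepage, just for fun…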

```python
import os
import time
import urllib.request

save_Path = "/Users/macbook/desktop/p/"


def DownloadImage(url=None, file_name=None):
    time.sleep(5)  # pause between downloads
    if url is not None:
        print('DownLoading', url)
        urllib.request.urlretrieve(url, file_name)


# Read the addresses first (relative path), then switch to the save directory.
# strip() removes the trailing newline, which would otherwise end up in both
# the URL and the file name.
with open('imgAddr', "r") as data:
    urls = [line.strip() for line in data]
os.chdir(save_Path)

for each_URL in urls:
    if each_URL:  # skip blank lines
        DownloadImage(each_URL, each_URL.replace('/', '_'))
```
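One thing worth checking when the saved addresses refuse to download: every line read from a text file keeps its trailing newline, and a scraper that prints a missing `src` writes the literal text `None`. Below is a small sketch of cleaning the list before downloading. The helper name `read_urls` is hypothetical, and I am not claiming this is the only reason the download failed; the site may well be blocking the requests too.

```python
def read_urls(lines):
    """Return cleaned URLs from an iterable of raw text lines.

    Strips surrounding whitespace (including the trailing newline) and keeps
    only entries that look like http/https addresses.
    """
    urls = []
    for line in lines:
        candidate = line.strip()
        if candidate.startswith(("http://", "https://")):
            urls.append(candidate)
    return urls


# Example with made-up lines, shaped like the contents of 'imgAddr'.
raw = ["http://example.com/a/1.jpg\n", "\n", "None\n", "  https://example.com/b/2.jpg  \n"]
print(read_urls(raw))
```

With a real file this would be used as `read_urls(open('imgAddr'))`, since iterating a file object yields its lines.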

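Summary: I'm oddly disappointed that no images got downloaded. There is a lot of room for my skills to grow..

Really it comes down to not understanding POST… how to set it up and what to pick QAQ. I went and used shadowsocks to get over the firewall, but I still can't understand how it is meant to be used…

Also, the way the answer handles file names is worth borrowing.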

```python
import requests


# `proxies` and `headers` are defined elsewhere in the answer (not shown here).
def download(url):
    r = requests.get(url, proxies=proxies, headers=headers)
    if r.status_code != 200:
        return
    # Drop the query string, then use the second-to-last path segment as the name.
    filename = url.split("?")[0].split("/")[-2]
    target = "./{}.jpg".format(filename)
    with open(target, "wb") as fs:
        fs.write(r.content)
    print("%s => %s" % (url, target))
```
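To see what that file name handling does on its own, here is a rough sketch that pulls the same idea into a separate function, with fallbacks for URLs that have fewer path segments. The function name `filename_from_url`, the fallback rules and the example URLs are my assumptions; the answer itself only uses the second-to-last segment.

```python
from urllib.parse import urlsplit


def filename_from_url(url, ext=".jpg"):
    """Derive a local file name from an image URL.

    Uses the second-to-last path segment when there is one (as in the answer),
    otherwise the last segment without its extension, otherwise "image".
    """
    path = urlsplit(url).path  # the path only, without query string or fragment
    parts = [p for p in path.split("/") if p]
    if len(parts) >= 2:
        stem = parts[-2]
    elif parts:
        stem = parts[-1].rsplit(".", 1)[0]
    else:
        stem = "image"
    return stem + ext


# Made-up URL with the shape "…/<id>/<size>.jpg?query".
print(filename_from_url("http://example.com/images/12345/superthumb.jpg?t=1"))
```

Taking the second-to-last segment makes sense when every image sits in its own numbered folder and the last segment is the same for all of them, which would otherwise make the files overwrite each other.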
